I am currently working on a project called Peer Feedback, where we are trying to build a nice peer feedback system for college students. We use the Canvas Learning Management System (CanvasLMS) API as the data source for our application. All data about students, courses, assignments, and submissions is fetched from CanvasLMS. The application is written in Python using Flask.

Current Setup

We are mostly getting data from the API and either relaying it to the frontend or storing it in the DB. So most of our testing is just mocking network calls and asserting response codes. Only a few functions contain original logic, so our test suite focuses on those functions and endpoints for the most part.

We recently ran into a situation where we needed to test something that involved fetching and filtering data from the API and then retrieving data from the DB based on the result.

Faker Library and issues

The problem we ran into is that we can’t test the function without first initializing the database. The code we had for initializing CanvasLMS used the Faker library, which provides nice fake data and gives the test data a real-world feel. But it came with its own set of problems:

Painful Manual Testing

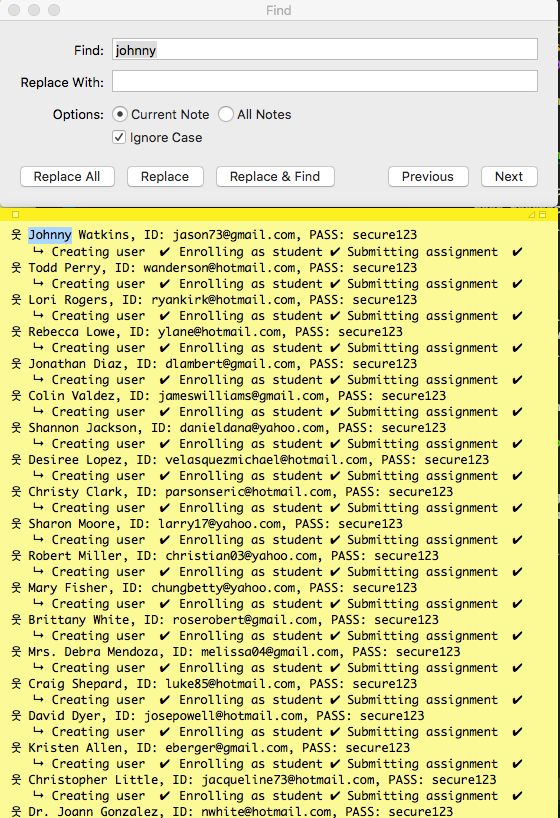

While we had the feel of testing real-world information, it came with real-world problems. For example, I could not log in as a user without first looking up the username in the output generated during initialization. So I had to keep a sticky note on my desktop, use the search functionality to find the user I wanted to test, copy their email, and log in with it.

Inconsistency across test cycles

When we write our tests, there is no assurance that we can reference a particular user by id and expect parameters like email or username to match. Because the test data is generated with different fake values every time, any reference or association between values holds true only for that cycle. For example, a function called get_user_by_email couldn’t be tested because we didn’t know what to expect in the resulting user object.

Complex Test Suite

To compensate for the inconsistency in the data across cycles, we increased the complexity of the test suite: we saved the test data in JSON files and used them for validation. It became a multi-step process and almost an application of its own. For example, the test for get_user_by_email would first initialize the DB, then read a JSON file containing the test data, get a user with an email and validate the function, then find a user without an email and validate that it throws the right error, then find a user with a malformed email… you get the idea. The test function itself now had enough logic to warrant a test suite of its own.

Real-world problems

With the real-world-like data came the real-world problems. The emails generated by Faker are not really fake. There is a high chance that a number of them belong to real people. So guess what would have happened when we decided to test our email program :)

Sensible Test Data

We are finally switching to more sensible test data. We are dropping Faker for user generation and shifting to a sequential user generation system, with usernames like user001 and emails like [email protected]. This solves the issues mentioned above:

- Now I can log in without having to first look the user up in a table. All I need to do is append an integer to the word user.

- I can be sure that user001 will have the email [email protected] and that these associations will be consistent across test cycles.

- I no longer have to read a JSON file to get a user object and its related test information. I can simply pick one using the userXXX template, which reduces the complexity of the test suite.

- And we won’t be getting emails from random people asking us to remove them from mailing lists, and we are probably saving ourselves from being blacklisted as a spam domain.
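As a rough illustration of the idea, here is a minimal sketch of sequential user generation. The helper names, the dictionary layout, and the in-memory lookup are all hypothetical and are not taken from the Peer Feedback code. The email domain is an assumption too: example.com is used because it is reserved for documentation, so mail sent there cannot reach a real person.

```python
# Hypothetical sketch: none of these names come from the real project.
# example.com is an assumed domain; it is reserved for documentation use.
TEST_EMAIL_DOMAIN = "example.com"


def make_test_user(n):
    """Build the n-th test user; every field is derived from n alone."""
    username = f"user{n:03d}"  # 1 -> user001, 42 -> user042
    return {
        "id": n,
        "username": username,
        "email": f"{username}@{TEST_EMAIL_DOMAIN}",
    }


def make_test_users(count):
    """Return users numbered 1 through count, in order."""
    return [make_test_user(n) for n in range(1, count + 1)]


def get_user_by_email(users, email):
    """In-memory stand-in for the real lookup, which would query the DB."""
    for user in users:
        if user["email"] == email:
            return user
    raise LookupError(f"no user with email {email!r}")


# Expected values can be written directly in the test, with no JSON file.
users = make_test_users(25)
user = get_user_by_email(users, "user007@example.com")
assert user["id"] == 7
assert user["username"] == "user007"
```

Because every field follows from the sequence number, the same assertions hold on every test cycle, and the test itself stays short enough that it needs no supporting data files.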

Conclusion

Faker provided us with data that helped us test a number of things in the frontend, such as names of different lengths, multi-part names, and unique names for testing filtering and searching. But it also added a set of problems that made our work difficult and slow.

Our solution for having a sensible dataset for testing is a plain, numerically sequenced dataset.